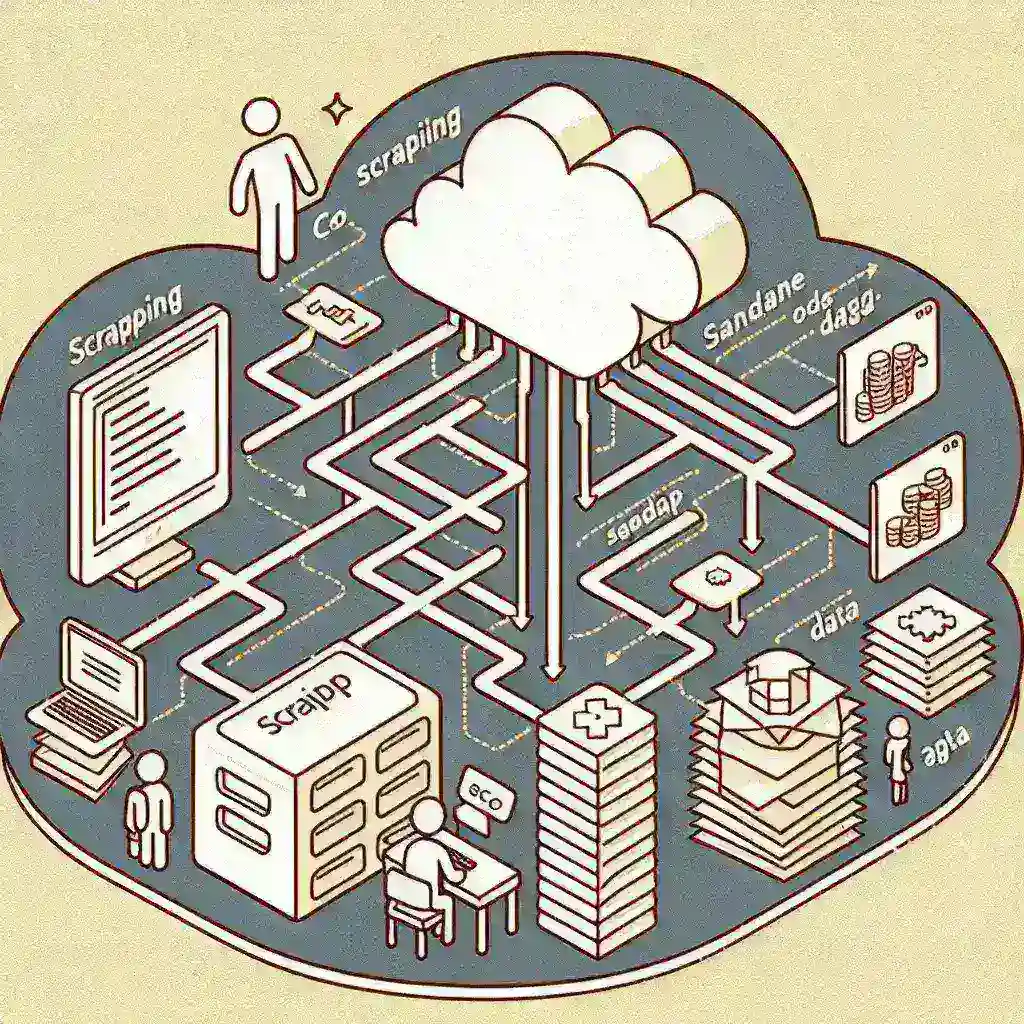

In today’s data-driven landscape, web scraping has become an essential tool for businesses seeking competitive intelligence, market research, and automated data collection. However, traditional server-based scraping often brings challenges in scalability, cost management, and maintenance overhead. Serverless architecture offers a different way to run scraping operations, one that addresses many of these problems directly.

Understanding Serverless Architecture for Web Scraping

Serverless computing represents a paradigm shift in which developers execute code without managing the underlying infrastructure. For web scraping, this means your scraping jobs run on demand, scale automatically, and consume (and are billed for) resources only while they are actively processing data. Serverless platforms from the major cloud providers, such as AWS Lambda, Google Cloud Functions, and Azure Functions, have made this approach increasingly accessible and cost-effective.

The serverless model is event-driven, which makes it well suited to scraping tasks triggered by scheduled intervals, API calls, or data changes. Because no servers have to be kept running, operational costs and complexity drop, while capacity scales far beyond what a single machine could provide, up to the platform's per-account concurrency quotas.
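To make the event-driven model concrete, here is a minimal sketch of an AWS Lambda-style Python handler that serves two triggers: an Amazon EventBridge schedule and an HTTP request arriving through API Gateway. The `DEFAULT_URLS` list and the `run_scrape` placeholder are hypothetical; only the event fields checked (`detail-type`, `body`, `isBase64Encoded`) come from the shapes those services send.

```python
import base64
import json

# Hypothetical default targets for scheduled runs.
DEFAULT_URLS = ["https://example.com/products", "https://example.com/offers"]

def run_scrape(trigger, urls):
    """Placeholder for the real scraping logic; here it only reports what it would do."""
    return {"trigger": trigger, "queued": len(urls)}

def handler(event, context):
    # Amazon EventBridge scheduled rules deliver events with this detail-type.
    if event.get("detail-type") == "Scheduled Event":
        return run_scrape("schedule", DEFAULT_URLS)

    # API Gateway proxy integrations pass the HTTP request body as a string,
    # optionally base64-encoded.
    if "body" in event:
        raw = event["body"] or "{}"
        if event.get("isBase64Encoded"):
            raw = base64.b64decode(raw).decode("utf-8")
        payload = json.loads(raw)
        result = run_scrape("api", payload.get("urls", []))
        return {"statusCode": 200, "body": json.dumps(result)}

    raise ValueError("unrecognized event shape")
```

The same function code can be attached to several triggers; branching on the event shape keeps one deployment unit while each trigger still gets an appropriate response.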

Key Benefits of Serverless Scraping

Cost Efficiency and Resource Optimization

One of the most compelling advantages of serverless scraping is its pay-per-execution pricing model. Traditional server-based solutions require constant resource allocation, even during idle periods. With serverless functions, you only pay for the actual compute time used during scraping operations. This can result in significant cost savings, especially for sporadic or variable workloads.
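Pay-per-execution billing on platforms like AWS Lambda is typically a per-request fee plus a charge for compute measured in GB-seconds (allocated memory in GB multiplied by run time in seconds). The sketch below estimates a monthly bill under that model; the two rates are illustrative placeholders, not current list prices, and free-tier allowances are ignored.

```python
# Illustrative placeholder rates only; real prices vary by provider, region,
# CPU architecture and free-tier allowances, so check the current pricing page.
PRICE_PER_GB_SECOND = 0.00002
PRICE_PER_MILLION_REQUESTS = 0.20

def monthly_function_cost(invocations, avg_duration_s, memory_mb,
                          price_per_gb_s=PRICE_PER_GB_SECOND,
                          price_per_million=PRICE_PER_MILLION_REQUESTS):
    """Pay-per-execution estimate: compute (GB-seconds) plus a per-request fee."""
    gb_seconds = invocations * avg_duration_s * (memory_mb / 1024)
    return gb_seconds * price_per_gb_s + invocations / 1_000_000 * price_per_million

# A sporadic job: 10,000 runs a month, 30 s each, 512 MB of memory.
# 10,000 * 30 s * 0.5 GB = 150,000 GB-s -> 3.00 compute + 0.002 requests
estimate = monthly_function_cost(10_000, 30, 512)
```

Because the estimate scales linearly with invocations and drops to zero when nothing runs, it can be compared directly with the fixed monthly price of an always-on server sized for the same job.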

Automatic scaling lets your scraping jobs absorb sudden spikes in demand without manual intervention. Whether you’re scraping a few pages or thousands in parallel, the platform allocates resources dynamically, within the concurrency limits set for your account and function.
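A common way to exploit this scaling is fan-out: split a large URL list into batches and start one function invocation per batch. The sketch below only builds the batch payloads; the payload fields (`batch_id`, `urls`) and the default batch size of 50 are assumptions, not a platform requirement.

```python
import json

def chunk(items, size):
    """Yield consecutive slices of at most `size` items."""
    if size < 1:
        raise ValueError("size must be >= 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]

def build_fanout_payloads(urls, batch_size=50):
    """One JSON payload per worker invocation; batch_size is an assumed default."""
    return [
        json.dumps({"batch_id": n, "urls": batch})
        for n, batch in enumerate(chunk(urls, batch_size))
    ]
```

On AWS, a coordinator could pass each payload to boto3's Lambda client via `invoke(FunctionName=..., InvocationType="Event", Payload=payload.encode())`, or send it to an SQS queue that triggers the workers; the platform then runs the batches in parallel up to your concurrency limit.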

Simplified Deployment and Maintenance

Serverless platforms abstract away server management, operating system updates, and infrastructure maintenance, so developers can focus on the scraping logic rather than on system administration. Deployment comes down to uploading your code together with its dependencies, and the platform handles the rest.

Popular Serverless Platforms for Scraping

AWS Lambda: The Pioneer

AWS Lambda remains the most mature and feature-rich serverless platform. It supports multiple programming languages, including Python, Node.js, and Java, all popular choices for web scraping. Lambda integrates closely with other AWS services such as Amazon S3 for data storage, Amazon CloudWatch for monitoring, and Amazon EventBridge for scheduling.

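Lambda functions can run for up to 15 minutes per invocation, which is enough for most single-page or small-batch scraping tasks. For asynchronous invocations, such as those triggered by EventBridge schedules, Lambda automatically retries failed executions (twice by default) and can route events that still fail to a dead-letter queue or on-failure destination, which is essential for reliable data extraction.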

Google Cloud Functions: Simplicity and Integration

Google Cloud Functions offers excellent integration with Google’s ecosystem, including BigQuery for data analysis and Cloud Storage for file management. The platform supports automatic scaling and provides detailed execution metrics, making it easier to optimize scraping performance.

Azure Functions: Enterprise-Ready Solutions

Microsoft Azure Functions provides enterprise-grade features with strong integration capabilities for organizations already using Microsoft technologies. The platform offers flexible hosting options and comprehensive monitoring tools.

Implementation Strategies and Best Practices

Designing Efficient Scraping Functions

When implementing serverless scraping jobs, it’s crucial to design functions that are both efficient and resilient. Each function should handle a specific scraping task, such as extracting data from a particular website section or processing a batch of URLs. This modular approach enables better error handling and easier debugging.

Consider implementing the following strategies:

- Stateless Design: Ensure your functions don’t rely on persistent state between executions

- Timeout Management: Design functions to complete within platform time limits

- Error Handling: Implement robust retry logic and graceful failure handling

- Rate Limiting: Respect target website limitations to avoid blocking
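The error-handling and rate-limiting strategies above can be combined in a small fetch helper. The sketch below is hypothetical (`PoliteFetcher`, the one-second interval, three retries and the set of retryable status codes are all assumptions): it waits a minimum interval between requests, retries only transient failures using exponential backoff with random jitter, and raises immediately on permanent errors such as a 404. It uses only the Python standard library, and its `fetch`, `sleep` and `clock` functions can be swapped out so the logic can be tested offline.

```python
import random
import time
import urllib.error
import urllib.request

# Assumed: which HTTP statuses are worth retrying (rate limited / transient server errors).
RETRYABLE_STATUS = {429, 500, 502, 503, 504}

def is_retryable(exc):
    # HTTPError subclasses URLError, so it must be checked first.
    if isinstance(exc, urllib.error.HTTPError):
        return exc.code in RETRYABLE_STATUS
    return isinstance(exc, (urllib.error.URLError, TimeoutError))

class PoliteFetcher:
    """Spaces out requests and retries transient failures with exponential backoff.

    Hypothetical helper; the interval, retry count and backoff base are assumptions.
    """

    def __init__(self, min_interval_s=1.0, max_retries=3, base_backoff_s=0.5,
                 fetch=None, sleep=time.sleep, clock=time.monotonic):
        self.min_interval_s = min_interval_s
        self.max_retries = max_retries
        self.base_backoff_s = base_backoff_s
        self.fetch = fetch or self._urllib_fetch
        self.sleep = sleep
        self.clock = clock
        self._last_request = None

    @staticmethod
    def _urllib_fetch(url):
        with urllib.request.urlopen(url, timeout=10) as resp:
            return resp.read()

    def _wait_for_slot(self):
        # Rate limiting: never start requests closer together than min_interval_s.
        if self._last_request is not None:
            elapsed = self.clock() - self._last_request
            if elapsed < self.min_interval_s:
                self.sleep(self.min_interval_s - elapsed)
        self._last_request = self.clock()

    def get(self, url):
        for attempt in range(self.max_retries + 1):
            self._wait_for_slot()
            try:
                return self.fetch(url)
            except Exception as exc:
                if attempt == self.max_retries or not is_retryable(exc):
                    raise  # permanent error or retries exhausted: fail visibly
                # Exponential backoff with full jitter: random wait up to base * 2^attempt.
                self.sleep(random.uniform(0, self.base_backoff_s * 2 ** attempt))
```

Because functions are stateless, this limiter only spaces requests within a single invocation. Capping the overall request rate across parallel invocations also requires limiting concurrency (for example, with Lambda reserved concurrency) or coordinating through a shared store.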

Data Storage and Processing

Serverless scraping jobs typically need to store extracted data for further processing or analysis. Cloud storage services like AWS S3, Google Cloud Storage, or Azure Blob Storage provide cost-effective solutions for raw data storage. For structured data, consider using managed database services that integrate well with serverless platforms.
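For raw output in object storage, a predictable key layout and a line-oriented format make later processing simpler. The sketch below assumes a Hive-style `key=value` path (which query engines such as Amazon Athena can treat as partitions) and JSON Lines serialization; the `raw/` prefix and the `source` and `run_id` naming are hypothetical choices.

```python
import json
from datetime import datetime, timezone

def object_key(source, run_id, when=None):
    """Date-partitioned object key using Hive-style key=value path segments."""
    when = when or datetime.now(timezone.utc)
    return f"raw/source={source}/date={when:%Y-%m-%d}/run-{run_id}.jsonl"

def to_jsonl(records):
    """Serialize records as JSON Lines (one JSON object per line), UTF-8 encoded."""
    return "".join(json.dumps(r, ensure_ascii=False, sort_keys=True) + "\n"
                   for r in records).encode("utf-8")
```

On AWS, the two results could be passed to boto3's S3 client as `put_object(Bucket=..., Key=object_key(...), Body=to_jsonl(records))`; Google Cloud Storage and Azure Blob Storage offer equivalent upload calls in their SDKs.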

Implementing a data pipeline that processes scraped information in real time can add significant value to your scraping operations. Streaming services such as Amazon Kinesis Data Streams or Google Cloud Pub/Sub can help manage these data streams efficiently.

Overcoming Common Challenges

Cold Start Latency

One notable challenge in serverless scraping is cold start latency – the delay that occurs when a function hasn’t been used recently and needs to be initialized. For time-sensitive scraping operations, this can impact performance. Strategies to mitigate cold starts include:

- Keeping functions warm through scheduled invocations

- Optimizing function package size and dependencies

- Using provisioned concurrency where available

Memory and Execution Time Limits

Serverless platforms impose memory and execution time limits (AWS Lambda, for example, allows at most 10,240 MB of memory and 15 minutes per invocation) that can affect complex scraping operations. Design your functions to work within these limits by breaking large tasks into smaller, manageable chunks, and use pagination and batch processing to handle extensive data extraction efficiently.
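One way to stay inside the time limit is to check the remaining budget before each unit of work and return a cursor when time runs short, so a follow-up invocation can resume. This sketch uses the Lambda Python context method `get_remaining_time_in_millis()`; the event fields (`pages`, `cursor`), the 30-second safety margin and `scrape_page` are hypothetical.

```python
# Assumed: time reserved for writing results before the platform's hard timeout.
SAFETY_MARGIN_MS = 30_000

def scrape_page(url):
    """Hypothetical stand-in for fetching and parsing one page."""
    return {"url": url}

def handler(event, context):
    pages = event["pages"]              # hypothetical event field: URLs to process
    cursor = event.get("cursor", 0)     # where the previous invocation stopped
    results = []
    for i in range(cursor, len(pages)):
        # get_remaining_time_in_millis() is provided by the Lambda Python context object.
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            return {"done": False, "cursor": i, "results": results}
        results.append(scrape_page(pages[i]))
    return {"done": True, "cursor": len(pages), "results": results}
```

Whatever drives the job, for example an AWS Step Functions loop or a message re-queued to SQS, re-invokes the function with the returned `cursor` until `done` is true.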

Network and IP Management

Some websites apply IP-based rate limiting or blocking, which can affect serverless scraping jobs because functions typically send requests from the cloud provider's shared IP address ranges. Consider using proxy services or VPN solutions to manage IP rotation and reduce the likelihood of being blocked.
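A simple form of IP rotation is round-robin selection over a pool of proxies. The sketch below uses the standard library's `urllib.request.ProxyHandler` and `build_opener`; the proxy URLs and the `ProxyRotator` helper are hypothetical, and many proxy providers instead offer a single rotating gateway endpoint.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints; in practice these come from your proxy provider
# and, if they carry credentials, from a secrets store rather than source code.
PROXIES = [
    "http://proxy-a.example.net:8080",
    "http://proxy-b.example.net:8080",
]

class ProxyRotator:
    """Hands out an opener bound to the next proxy in round-robin order."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxies)

    def next_opener(self):
        proxy = next(self._cycle)
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)

def fetch_via_proxy(rotator, url, timeout=10):
    proxy, opener = rotator.next_opener()
    with opener.open(url, timeout=timeout) as resp:
        return proxy, resp.read()
```

Rotation spreads load across addresses but does not replace rate limiting; the per-site request pace still needs to respect the target's limits.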

Security and Compliance Considerations

When implementing serverless scraping solutions, security should be a top priority. Ensure that your functions follow the principle of least privilege, accessing only the resources necessary for their operation. Store sensitive configuration data like API keys and credentials in secure parameter stores or key management services.
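A minimal sketch of keeping credentials out of code: only the parameter's *name* is configured (here via a hypothetical `SCRAPER_API_KEY_PARAM` environment variable), and the value is fetched at runtime and cached for the life of the execution environment. The fetch function is injected; on AWS it could wrap boto3's SSM `get_parameter` call, as noted in the docstring.

```python
import os

# Cache for the lifetime of the execution environment, so warm invocations
# do not call the parameter store on every run.
_secret_cache = {}

def get_secret(name_env_var, fetch_parameter):
    """Resolve a secret whose parameter name (not value) is set in an environment variable.

    `fetch_parameter` is injected; on AWS it could wrap boto3's SSM client, e.g.
    ssm.get_parameter(Name=name, WithDecryption=True)["Parameter"]["Value"].
    """
    param_name = os.environ.get(name_env_var)
    if not param_name:
        raise RuntimeError(f"environment variable {name_env_var} is not set")
    if param_name not in _secret_cache:
        _secret_cache[param_name] = fetch_parameter(param_name)
    return _secret_cache[param_name]
```

Under least privilege, the function's IAM role would allow `ssm:GetParameter` only on that parameter's ARN. Cached values are refreshed only when a new environment starts, so rotated secrets may need a cache expiry.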

Additionally, be mindful of legal and ethical considerations when scraping websites. Always review and comply with robots.txt files, terms of service, and applicable data protection regulations.
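Python's standard library includes a robots.txt parser, `urllib.robotparser.RobotFileParser`, which can check a URL before it is fetched. This sketch parses robots.txt text that has already been downloaded; the user-agent string and the sample rules are hypothetical.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-scraper"  # hypothetical; identify your bot honestly

def load_rules(robots_txt):
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

def allowed(parser, url, user_agent=USER_AGENT):
    return parser.can_fetch(user_agent, url)

SAMPLE = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

rules = load_rules(SAMPLE)
```

Alternatively, `set_url(".../robots.txt")` followed by `read()` lets the parser download the file itself. The value from `crawl_delay()` (the non-standard `Crawl-delay` directive) is a natural input for a minimum interval between requests.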

Monitoring and Optimization

Effective monitoring is essential for maintaining reliable serverless scraping operations. Most cloud platforms provide built-in monitoring tools that track function execution, errors, and performance metrics. Set up alerts for failures and unusual patterns to ensure prompt issue resolution.
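Monitoring is easier when each log line is structured data instead of free text. This sketch is a small JSON formatter for Python's `logging` module; the field names and the `fields` convention for passing extra values are assumptions.

```python
import json
import logging
import sys

class JsonFormatter(logging.Formatter):
    """Emit one JSON object per line so log tooling can filter on fields."""

    def format(self, record):
        payload = {
            "level": record.levelname,
            "message": record.getMessage(),
            "logger": record.name,
        }
        # Structured extras passed as logger.info(..., extra={"fields": {...}}).
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload, sort_keys=True)

def make_logger(name="scraper", stream=sys.stdout):
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.propagate = False  # avoid duplicate lines via the root logger
    return logger

# Usage: make_logger().info("page scraped", extra={"fields": {"url": "https://example.com/a", "status": 200}})
```

In AWS Lambda, output written to stdout ends up in CloudWatch Logs, where Logs Insights can query JSON fields such as `status` directly. That makes it straightforward to build alerts on error counts or unusual response codes.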

Regular optimization of your scraping functions can improve performance and reduce costs. Monitor execution times, memory usage, and error rates to identify opportunities for improvement. Consider implementing caching mechanisms for frequently accessed data and optimizing your code for better resource utilization.
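One caching mechanism that fits scraping well is the HTTP conditional request. Store each page's `ETag` and `Last-Modified` values, send them back as `If-None-Match` and `If-Modified-Since`, and skip re-parsing when the server answers `304 Not Modified`. The sketch below covers only the cache logic; the `CacheEntry` type is hypothetical. Because functions are stateless, the entries would need to live in an external store keyed by URL.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class CacheEntry:
    etag: Optional[str] = None
    last_modified: Optional[str] = None
    body: bytes = b""

def conditional_headers(entry):
    """Request headers that let the server reply 304 if the page is unchanged."""
    headers = {}
    if entry is None:
        return headers
    if entry.etag:
        headers["If-None-Match"] = entry.etag
    if entry.last_modified:
        headers["If-Modified-Since"] = entry.last_modified
    return headers

def resolve_response(entry, status, headers, body):
    """Return (entry to store, body to process or None if unchanged).

    `headers` should be case-insensitive (e.g. http.client.HTTPMessage) or use
    the canonical names shown here.
    """
    if status == 304 and entry is not None:
        return entry, None  # unchanged: skip downloading and re-parsing the content
    new_entry = CacheEntry(etag=headers.get("ETag"),
                           last_modified=headers.get("Last-Modified"),
                           body=body)
    return new_entry, body
```

With `urllib`, a 304 response is raised as `urllib.error.HTTPError` with `code == 304`, so the caller should catch it and pass status 304 to `resolve_response`.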

Future Trends and Considerations

The serverless landscape continues to evolve, with new features and capabilities being added regularly. Edge computing integration, improved cold start performance, and enhanced development tools are making serverless scraping even more attractive for organizations of all sizes.

As machine learning and AI technologies advance, we can expect to see more intelligent scraping solutions that can adapt to website changes automatically and extract more sophisticated data insights.

Getting Started: A Practical Approach

To begin your serverless scraping journey, start with a simple proof-of-concept project. Choose a straightforward scraping task and implement it using your preferred serverless platform. Focus on understanding the platform’s capabilities and limitations before scaling to more complex operations.
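A proof of concept can be very small. The sketch below is a Lambda-style handler that fetches one page and extracts its title and links using only the Python standard library (`urllib.request` and `html.parser`). The event shape `{"url": ...}` and the user-agent string are hypothetical, and a production scraper would add the retry, rate-limiting and robots.txt checks discussed earlier.

```python
import json
import urllib.request
from html.parser import HTMLParser

class LinkAndTitleParser(HTMLParser):
    """Collects the <title> text and every <a href> on a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data

def extract(html):
    parser = LinkAndTitleParser()
    parser.feed(html)
    parser.close()
    return {"title": parser.title.strip(), "links": parser.links}

def handler(event, context):
    # Hypothetical event shape: {"url": "https://example.com/"}
    req = urllib.request.Request(event["url"], headers={"User-Agent": "example-poc-scraper/0.1"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        html = resp.read().decode(charset, errors="replace")
    return {"statusCode": 200, "body": json.dumps(extract(html))}
```

Keeping the parsing in a separate `extract` function lets you test it locally against saved HTML before deploying anything.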

Consider using infrastructure-as-code tools like AWS CloudFormation, Terraform, or Azure Resource Manager to manage your serverless scraping infrastructure. This approach ensures reproducibility and makes it easier to deploy and manage multiple scraping functions.

Serverless architecture is a powerful paradigm for modern web scraping, offering elastic scalability, cost efficiency, and operational simplicity. By understanding platform capabilities, following best practices, and addressing common challenges, organizations can build robust and efficient data extraction solutions that scale with their needs. As the serverless ecosystem continues to mature, we can expect further innovation in how web scraping and data collection are done in the cloud.
